Unlock RemoteIoT Data Power: AWS Batch Job Guide

In a world awash in data, how can businesses harness the power of information from remote environments to drive innovation and efficiency? The answer lies in the sophisticated orchestration of RemoteIoT batch jobs on Amazon Web Services (AWS).

Data has become the lifeblood of modern enterprises. The ability to process it swiftly, accurately, and cost-effectively is no longer a luxury but a necessity. AWS offers a comprehensive suite of tools designed to streamline complex batch processing workflows. Whether the input is a trickle of readings from a handful of IoT devices or a massive dataset, these tools are designed to run batch jobs reliably and efficiently. This lets organizations manage their data with confidence and use it to inform critical business decisions.

This guide is crafted to demystify the complexities involved in setting up and managing RemoteIoT batch jobs using AWS. It aims to provide developers and IT professionals with the knowledge and strategies needed to optimize their batch processing capabilities, ultimately improving performance and controlling costs. Whether you are managing a network of sensors in a remote agricultural field or analyzing data streams from a global network of industrial devices, AWS offers the scalability and flexibility to meet your needs. The following information provides an overview of essential tools, best practices, and actionable strategies.

Key Concepts

Understanding RemoteIoT batch jobs in the context of AWS requires grasping several key concepts. These include the following items:

- Remote IoT: This refers to the deployment of Internet of Things (IoT) devices and technologies in remote environments.

- Batch Processing: This is a method of processing data in large, discrete groups (batches), often at scheduled intervals.

- AWS Batch: A fully managed service designed to execute batch computing workloads on the AWS Cloud.

- Compute Environment: Defines the compute resources (e.g., EC2 instances) allocated to run batch jobs.

- Job Queue: Serves as a container for batch jobs, managing their scheduling and priority.

These concepts will be explored in more detail throughout this guide. Remember, the successful implementation of RemoteIoT batch processing relies on a solid understanding of these components and how they interact within the AWS ecosystem.

Before diving deeper, it helps to review the basic infrastructure involved, which will make the processing steps easier to follow.

Introduction to AWS Batch

AWS Batch emerges as a crucial element in the landscape of cloud computing, offering a fully managed service that simplifies the execution of batch computing workloads on the AWS Cloud. Its significance lies in its ability to handle large-scale data processing tasks efficiently and cost-effectively.

Key Features of AWS Batch

- Dynamic Resource Provisioning: AWS Batch dynamically provisions compute resources (such as EC2 instances or Fargate containers) based on the needs of your batch jobs. This ensures that you have the necessary resources available when you need them, without the need to manually manage infrastructure.

- Integration with AWS Services: It integrates with other AWS services such as Amazon EC2, AWS Fargate, Amazon S3, and Amazon CloudWatch, offering a comprehensive solution for batch processing.

- Container Support: AWS Batch runs each job as a containerized application. You package your code and its dependencies into a Docker image, which ensures consistent execution across different environments.

- Job Scheduling: AWS Batch schedules jobs according to job queue priority and the dependencies you declare between jobs. To start jobs at set times, you can pair it with a scheduler such as Amazon EventBridge.
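Dependency-based scheduling can be sketched as follows. This is a minimal illustration, not a definitive implementation: the helper `build_submit_request` and the queue and definition names in the usage note are hypothetical. The helper only builds the keyword arguments for boto3's `submit_job` call, so it can be inspected without AWS credentials.

```python
from typing import Any, Dict, List, Optional


def build_submit_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    depends_on_job_ids: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's batch.submit_job call."""
    request: Dict[str, Any] = {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
    }
    if depends_on_job_ids:
        # The new job is not scheduled until the listed jobs have finished.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on_job_ids]
    return request
```

In use, the job ID returned when submitting an aggregation job would be passed as `depends_on_job_ids` for the cleaning job, for example `boto3.client("batch").submit_job(**build_submit_request("clean", "remoteiot-queue", "remoteiot-clean", [aggregate_job_id]))`.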

AWS Batch is particularly advantageous for RemoteIoT batch job processing. It offers the scalability and flexibility needed to handle the extensive data processing tasks. As the volume of data generated by RemoteIoT devices grows, AWS Batch can seamlessly scale resources to keep up with the demand.

Understanding RemoteIoT Batch Jobs

What is RemoteIoT?

RemoteIoT involves deploying Internet of Things (IoT) devices and technologies in remote environments. These devices generate large amounts of data that require batch processing to derive actionable insights. Consider a scenario in a remote agricultural field, where the sensors continuously collect data on soil moisture, temperature, and humidity levels.

Why use batch processing for RemoteIoT?

Batch processing offers a controlled and efficient way to process large datasets, which makes it well suited to RemoteIoT applications. Because a batch is collected first and processed afterwards, it tolerates delays and interruptions, a useful property in remote settings where connectivity is unreliable.

Advantages of Batch Processing for RemoteIoT

- Enhanced Data Accuracy and Reliability: Batch processing allows the data to be validated and cleaned before analysis, which increases the accuracy of the results.

- Significant Reduction in Processing Costs: Batch processing allows for the optimized use of compute resources, which minimizes processing costs.

- Improved Scalability to Accommodate Growing Data Volumes: As more IoT devices are deployed and data volume grows, batch processing solutions can be scaled.

For this reason, batch processing is an ideal solution for processing data from remote IoT devices.
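To illustrate why batching tolerates delayed uploads, here is a small sketch that groups readings into hourly batches by the time they were recorded rather than the time they arrived. The function name and the `timestamp` field (Unix seconds) are assumptions made for this example.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, List


def group_into_hourly_batches(readings: List[dict]) -> Dict[str, List[dict]]:
    """Group readings by the UTC hour in which each one was recorded."""
    batches: Dict[str, List[dict]] = defaultdict(list)
    for reading in readings:
        recorded = datetime.fromtimestamp(reading["timestamp"], tz=timezone.utc)
        # A late-arriving reading still lands in the hour it was recorded.
        batches[recorded.strftime("%Y-%m-%dT%H:00Z")].append(reading)
    return dict(batches)
```

A reading that reaches the cloud hours late because of a connectivity gap is still assigned to the correct batch, so the batch job can simply run once the window is considered closed.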

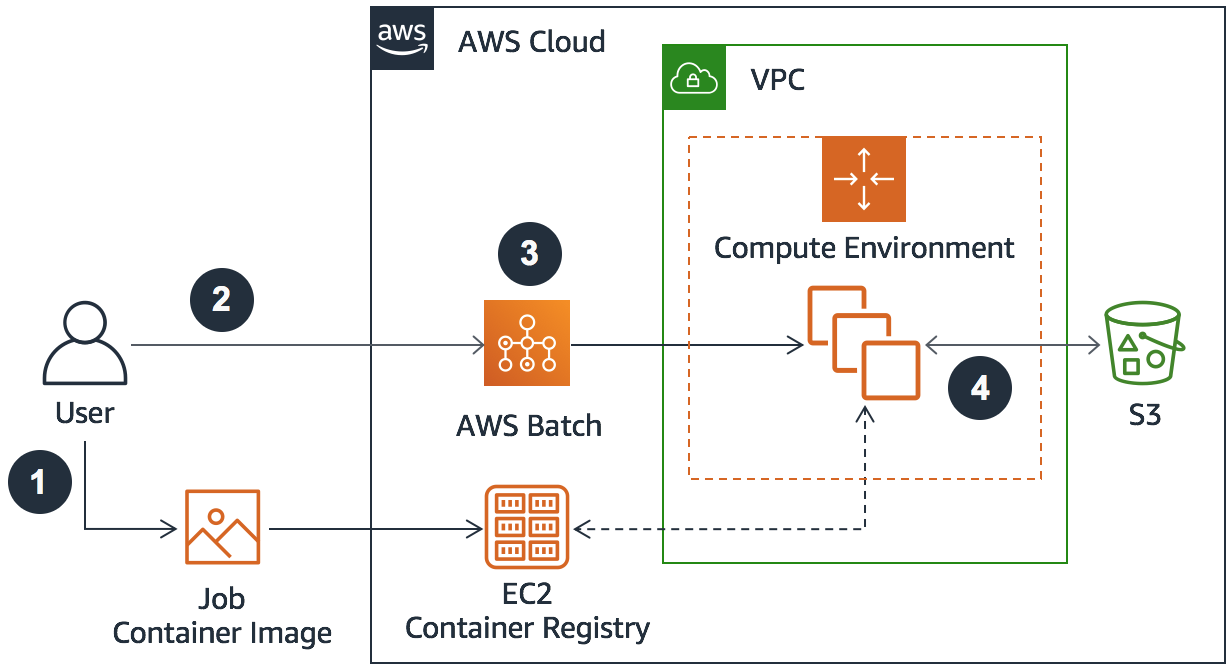

AWS Remote Batch Job Example

Let's delve into a practical example of a RemoteIoT batch job in AWS. The scenario involves managing a network of IoT devices in a remote agricultural field. These devices continuously collect data on soil moisture, temperature, and humidity levels. By using AWS Batch, you can automate this entire workflow, ensuring that the data is processed consistently and efficiently.

AWS Batch Job Tasks

- Data Aggregation: Collecting data from multiple devices into a single dataset. This step involves retrieving the data from various data sources and combining it into a unified format.

- Data Cleaning and Preprocessing: Cleaning and preprocessing the data to eliminate inconsistencies. The cleaning process includes tasks like handling missing values, removing outliers, and standardizing data formats.

- Anomaly Detection: Identifying anomalies and generating alerts for potential issues. This involves analyzing the data for unusual patterns or deviations.

- Data Storage: Storing the processed data securely in Amazon S3 for future use. Amazon S3 provides a secure and scalable storage solution, ensuring the data is accessible and protected.

This end-to-end process demonstrates the power and flexibility of AWS Batch in handling complex RemoteIoT data processing tasks. Automating the workflow ensures that the data is processed consistently and efficiently, providing you with the insights to make informed decisions.
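The first three tasks above can be sketched in plain Python. This is an illustrative outline only: the field name `soil_moisture`, the function names, and the simple z-score rule for anomalies are assumptions for this example, not a method prescribed by AWS Batch. In a real job, this code would run inside the container and write its results to Amazon S3.

```python
from statistics import mean, pstdev
from typing import Dict, List


def aggregate(per_device: Dict[str, List[dict]]) -> List[dict]:
    """Merge per-device readings into one list, tagging each with its device ID."""
    return [
        {"device_id": device_id, **reading}
        for device_id, readings in per_device.items()
        for reading in readings
    ]


def clean(rows: List[dict], field: str) -> List[dict]:
    """Drop rows where the given field is missing."""
    return [row for row in rows if row.get(field) is not None]


def find_anomalies(rows: List[dict], field: str, threshold: float = 2.0) -> List[dict]:
    """Return rows lying more than `threshold` standard deviations from the mean."""
    values = [row[field] for row in rows]
    if len(values) < 2:
        return []
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # All values identical, so nothing stands out.
    return [row for row in rows if abs(row[field] - mu) / sigma > threshold]
```

A typical call chain would be `find_anomalies(clean(aggregate(per_device), "soil_moisture"), "soil_moisture")`. A z-score threshold is only one of many possible anomaly rules and is used here because it is short enough to read at a glance.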

Setting Up AWS Remote Batch

Step 1: Create a Compute Environment

A compute environment is the foundation of AWS Batch. It defines the resources allocated for running your batch jobs.

Steps

- Sign in to the AWS Management Console: Access the AWS Management Console using your AWS account credentials.

- Access the AWS Batch Service: Navigate to the AWS Batch service, which can be found in the AWS Management Console.

- Select "Compute Environments" and choose "Create": In the AWS Batch console, go to the "Compute Environments" section and initiate the creation process.

- Specify Compute Resources: Define the compute resources required for your workload, such as the instance types and the minimum and maximum number of vCPUs.
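The same configuration can be created programmatically. The sketch below builds the request for boto3's `create_compute_environment` call for a managed EC2 environment. The helper name and its defaults (16 vCPUs maximum, the `optimal` instance type family) are assumptions for illustration, and the subnet, security group, and instance role values must come from your own account.

```python
from typing import Any, Dict, Sequence


def build_compute_environment_request(
    name: str,
    subnet_ids: Sequence[str],
    security_group_ids: Sequence[str],
    instance_role: str,
    instance_types: Sequence[str] = ("optimal",),
    max_vcpus: int = 16,
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's batch.create_compute_environment."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch provisions and scales the instances.
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",
            "allocationStrategy": "BEST_FIT_PROGRESSIVE",
            "minvCpus": 0,  # Scale to zero when no jobs are waiting.
            "maxvCpus": max_vcpus,
            "instanceTypes": list(instance_types),
            "subnets": list(subnet_ids),
            "securityGroupIds": list(security_group_ids),
            "instanceRole": instance_role,  # ECS instance profile name or ARN.
        },
    }
```

With credentials configured, the request would be sent with `boto3.client("batch").create_compute_environment(**request)`.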

Step 2: Create a Job Queue

A job queue holds your submitted batch jobs until they can be scheduled onto a compute environment. It acts as the central point for submitting, prioritizing, and monitoring your jobs.

Steps

- Go to the "Job Queues" Section: In the AWS Batch console, locate the "Job Queues" section.

- Create and Configure: Initiate the creation of a new job queue and configure the settings. This includes specifying the queue name, priority, and other relevant details.

- Associate with Compute Environment: Link the job queue with the compute environment you created earlier. This association ensures that the jobs submitted to the queue can access the resources defined in the compute environment.
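As a rough programmatic equivalent, the following sketch builds the request for boto3's `create_job_queue` call. The helper name is hypothetical. The compute environments are tried in the order given.

```python
from typing import Any, Dict, Sequence


def build_job_queue_request(
    name: str,
    compute_environments: Sequence[str],
    priority: int = 1,
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's batch.create_job_queue."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        # Queues with a higher priority value are evaluated first when they
        # share a compute environment.
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": position, "computeEnvironment": environment}
            for position, environment in enumerate(compute_environments, start=1)
        ],
    }
```

The request would be sent with `boto3.client("batch").create_job_queue(**request)`, passing the name or ARN of the compute environment created in the previous step.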

Step 3: Submit a Batch Job

Submitting a batch job is the final step in launching your data processing tasks. It involves defining the job, specifying its properties, and pointing it at the necessary input data. Submitting the job triggers the execution of your workload within the AWS Batch environment.

Steps

- Define Job: Create a job definition. This includes specifying the container properties, such as the image, command, vCPUs, and memory.

- Specify Job Properties: Set the properties for your job, such as the job name, the job queue to submit it to, and any other relevant settings. The job queue, not the job itself, determines which compute environment runs the job.

- Provide Input Data: Specify the location of the input data that your batch job needs to process, for example as a parameter or environment variable passed to the container.
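These three steps might look like this with boto3. The sketch only builds the requests for `register_job_definition` and `submit_job`. The image URI, the command, and the `INPUT_URI` environment variable are hypothetical placeholders chosen for this example.

```python
from typing import Any, Dict, List


def build_job_definition_request(name: str, image: str, command: List[str]) -> Dict[str, Any]:
    """Build keyword arguments for boto3's batch.register_job_definition."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            "command": command,
            # Resource values are passed as strings; MEMORY is in MiB.
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},
            ],
        },
    }


def build_submit_job_request(
    job_name: str, job_queue: str, job_definition: str, input_uri: str
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's batch.submit_job."""
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        # Hypothetical convention: the container reads its input location
        # from the INPUT_URI environment variable.
        "containerOverrides": {
            "environment": [{"name": "INPUT_URI", "value": input_uri}]
        },
    }
```

Each request is sent by unpacking it into the matching client method, for example `boto3.client("batch").submit_job(**request)`. Passing the input location as an environment variable is one option among several; job `parameters` are another.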

Tools for RemoteIoT Batch Processing

AWS provides a diverse set of tools and services to enhance RemoteIoT batch processing capabilities. These tools can be integrated with AWS Batch to create a robust and flexible processing pipeline for RemoteIoT applications. The following is a list of relevant AWS services and how they can be used within the broader context of batch processing.

- AWS Lambda: AWS Lambda is a compute service that lets you run code without provisioning or managing servers. For RemoteIoT batch processing, Lambda suits lightweight, short-running tasks (a single invocation can run for at most 15 minutes), such as filtering, transforming, or aggregating data from IoT devices, or triggering a batch job when new data arrives.

- Amazon S3: Amazon S3 is a highly scalable object storage service. For RemoteIoT batch processing, S3 can hold both the raw data arriving from devices and the processed output, keeping the data secure, accessible, and cost-effective to store.

- AWS Glue: AWS Glue is a serverless data integration service that simplifies ETL (Extract, Transform, Load) operations. In the context of RemoteIoT, Glue facilitates the ETL operations needed to streamline data processing. This allows data to be cleansed, transformed, and prepared for analysis.

- AWS Step Functions: AWS Step Functions is a serverless orchestration service that lets you coordinate multiple AWS services. It enables the orchestration of complex workflows, ensuring smooth execution of your batch processing pipelines. It coordinates the execution of multiple steps in your data processing workflow.

Integrating these tools with AWS Batch allows for the construction of a robust and flexible batch processing pipeline tailored to your RemoteIoT applications. The various tools can be leveraged to create a scalable and efficient solution.
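One way these services might fit together is a Lambda function that reacts to new objects in S3 and submits a Batch job for each. The sketch below covers only the translation from an S3 event notification to `submit_job` requests. The function name, the `process-` job name prefix, and the `INPUT_URI` variable are assumptions for illustration.

```python
import re
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def job_requests_from_s3_event(
    event: Dict[str, Any], job_queue: str, job_definition: str
) -> List[Dict[str, Any]]:
    """Turn an S3 event notification into one submit_job request per object."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        # Job names allow only letters, numbers, hyphens, and underscores.
        safe_key = re.sub(r"[^A-Za-z0-9_-]", "-", key)[:100]
        requests.append(
            {
                "jobName": f"process-{safe_key}",
                "jobQueue": job_queue,
                "jobDefinition": job_definition,
                "containerOverrides": {
                    "environment": [
                        {"name": "INPUT_URI", "value": f"s3://{bucket}/{key}"}
                    ]
                },
            }
        )
    return requests
```

Inside a Lambda handler, each request would be passed to `boto3.client("batch").submit_job(**request)`. Keeping the translation in a pure function makes it easy to test without an AWS account.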

Optimizing RemoteIoT Batch Jobs

Optimizing RemoteIoT batch jobs involves fine-tuning various parameters to maximize performance and minimize costs. The following strategies can be implemented to ensure that RemoteIoT batch jobs operate at peak efficiency while maintaining cost-effectiveness.

- Leverage Spot Instances: Use Spot Instances to significantly reduce compute costs. Spot Instances offer spare EC2 capacity at a discount, but they can be reclaimed at short notice, so they suit jobs that can safely be retried.

- Implement Job Prioritization: Implement job prioritization based on urgency and importance. This helps to ensure that critical jobs are completed quickly.

- Utilize Amazon CloudWatch: Use Amazon CloudWatch to monitor job performance, track resource utilization, and analyze logs.

- Regularly Update Job Definitions: Periodically review and update job definitions so that jobs pick up new container images, resource settings, and service features. Each update is registered as a new revision, so earlier revisions remain available.

Adopting these optimization techniques will result in a robust, efficient, and cost-effective RemoteIoT batch processing environment.
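For the Spot Instance strategy, a sketch of the relevant settings is shown below: a `computeResources` block of type `SPOT` and a retry strategy that retries only when the host instance was reclaimed. The helper name and the five-attempt limit are assumptions. The `Host EC2*` status reason pattern follows the form AWS documents for Spot interruptions, but verify it against the current documentation before relying on it.

```python
from typing import Any, Dict, Sequence


def build_spot_compute_resources(
    subnet_ids: Sequence[str],
    security_group_ids: Sequence[str],
    instance_role: str,
    max_vcpus: int = 64,
) -> Dict[str, Any]:
    """Build a computeResources block that uses Spot capacity."""
    return {
        "type": "SPOT",
        "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
        "minvCpus": 0,
        "maxvCpus": max_vcpus,
        "instanceTypes": ["optimal"],
        "subnets": list(subnet_ids),
        "securityGroupIds": list(security_group_ids),
        "instanceRole": instance_role,
    }


# Retry when the instance was reclaimed, exit immediately on any other failure.
SPOT_RETRY_STRATEGY: Dict[str, Any] = {
    "attempts": 5,
    "evaluateOnExit": [
        {"onStatusReason": "Host EC2*", "action": "RETRY"},
        {"onReason": "*", "action": "EXIT"},
    ],
}
```

The compute resources block goes into a `create_compute_environment` request, and the retry strategy can be passed as `retryStrategy` when registering a job definition or submitting a job.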

Best Practices for AWS Batch

Effective management of AWS Batch jobs requires a set of best practices. By following these practices, you can ensure the efficiency and reliability of your data processing workflows.

Plan Your Workload

Careful planning is crucial for efficiently utilizing AWS Batch. A proactive approach helps allocate resources effectively and prevents unnecessary expenses.

Monitor and Debug

Consistently monitor your batch jobs using Amazon CloudWatch and other monitoring tools. This allows you to promptly identify and resolve issues, so that your jobs run as intended without disruptions.
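As a starting point for debugging, the sketch below condenses the response of boto3's `describe_jobs` call into the fields most useful when a job fails, including the CloudWatch Logs stream that holds the container output. The function names are hypothetical, and the sketch assumes single-container jobs.

```python
from typing import Any, Dict, List


def summarize_jobs(describe_jobs_response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Extract status and log location for each job in a describe_jobs response."""
    summary = []
    for job in describe_jobs_response.get("jobs", []):
        container = job.get("container", {})
        summary.append(
            {
                "jobName": job["jobName"],
                "status": job["status"],
                "reason": job.get("statusReason"),
                "exitCode": container.get("exitCode"),
                # Stream name within the job's CloudWatch Logs log group.
                "logStreamName": container.get("logStreamName"),
            }
        )
    return summary


def failed_jobs(summary: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return only the jobs that ended in the FAILED state."""
    return [job for job in summary if job["status"] == "FAILED"]
```

The input would come from `boto3.client("batch").describe_jobs(jobs=[...])`. By default, Batch sends container output to the `/aws/batch/job` log group, so the stream name is usually enough to find the relevant log lines.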

Secure Your Data

Implement strong security measures to safeguard your data during batch processing. Use AWS Identity and Access Management (IAM) to control access to your resources and encrypt sensitive information with AWS Key Management Service (KMS).

Security Considerations

Prioritizing security is crucial to maintaining the integrity and confidentiality of your data. Implement robust security measures to protect your data and applications.

- Utilize IAM: Implement IAM roles and policies to restrict unauthorized access to AWS resources. By using IAM roles, you can assign specific permissions to the resources used by your batch jobs.

- Encrypt Data: Encrypt data both in transit and at rest using AWS encryption services. Encryption in transit (for example, TLS) protects data as it moves between devices and services, and encryption at rest protects it once it is stored.

- Audit Security Settings: Regularly audit your security settings and update them as needed to address evolving threats. Monitor your security settings to ensure they meet your organization's security standards.

By prioritizing security, you can safeguard your RemoteIoT applications against potential risks and vulnerabilities.
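The IAM and encryption points can be made concrete with a sketch of a least-privilege policy for a job's IAM role, plus an S3 upload request that asks for server-side encryption with a KMS key. The helper names, the bucket and prefix layout, and the choice of permissions are assumptions for this example, so adapt them to your own access patterns.

```python
from typing import Any, Dict


def build_job_role_policy(
    bucket: str, input_prefix: str, output_prefix: str, kms_key_arn: str
) -> Dict[str, Any]:
    """Build an IAM policy document: read raw data, write results, use one KMS key."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{input_prefix}*",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{output_prefix}*",
            },
            {
                "Effect": "Allow",
                "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
                "Resource": kms_key_arn,
            },
        ],
    }


def build_encrypted_put_request(
    bucket: str, key: str, body: bytes, kms_key_arn: str
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's s3.put_object with SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_arn,
    }
```

The policy document can be serialized with `json.dumps` and attached to the job role, and the upload request would be sent with `boto3.client("s3").put_object(**request)`.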

Real-World Use Cases

RemoteIoT batch job processing in AWS has diverse applications across various industries. Here are some practical examples:

- Healthcare: Process data from medical devices to monitor patient health and detect anomalies. This involves collecting and processing data from various medical devices.

- Manufacturing: Analyze sensor data to optimize production processes and improve efficiency. Data from sensors on equipment can be analyzed to identify inefficiencies.

- Energy: Monitor energy consumption patterns to enhance resource management and sustainability. Data from smart meters, sensors, and other devices can be collected and analyzed to optimize energy usage.

These use cases highlight the versatility and power of AWS Batch in handling complex RemoteIoT data processing tasks across different sectors.

To summarize, RemoteIoT batch job processing in AWS provides a scalable and efficient solution for managing large-scale data processing tasks. It provides the ability to streamline workflows and extract valuable insights from IoT data.

AWS Batch Documentation: https://docs.aws.amazon.com/batch/

AWS IoT Documentation: https://docs.aws.amazon.com/iot/

AWS Security Best Practices: https://aws.amazon.com/security/best-practices/
